This web page was created within the BALTICS project, funded by the European Union’s Horizon 2020 Research and Innovation Programme under grant agreement No. 692257.

Experience

Large-scale numerical calculations of physical fields are a hallmark of the last 50 years, made possible by rapid progress in computing technology. The need for such calculations comes from manufacturing, the development of new products, and the natural sciences, including the study of climate and even cosmological-scale structures.

Numerical methods and their implementation in software products are evolving rapidly, driven by modern production trends such as economical use of materials, automated design, a consumer culture that dictates short product life cycles, and the cost of experimental development, among other reasons.

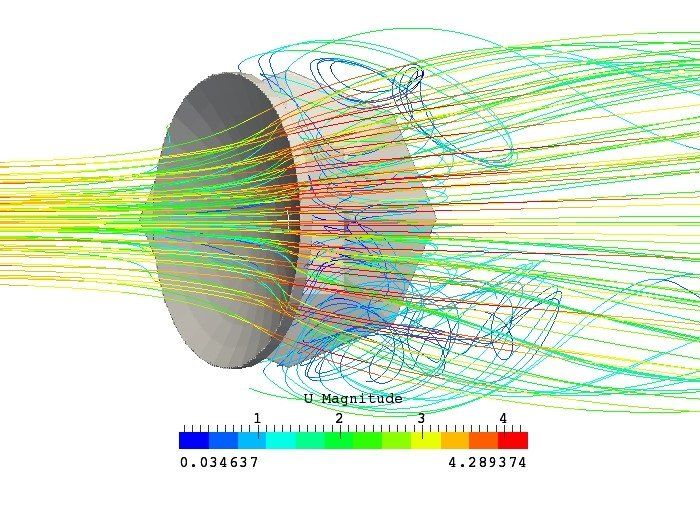

Computational fluid dynamics (CFD) and mechanical calculations are gaining ever more influence in the design and optimization of new products, saving money, raw materials, and time. Creating new products in today’s industry is almost unthinkable without accompanying numerical simulations.

Solid body deformation and load calculations, fatigue load and fracture mechanics, heat and mass exchange in liquids and gases, the interaction of solid bodies with liquids, and electromagnetic field calculations are engineering physics problems now addressed by many scientific institutions, manufacturers, and engineering consultants, who usually cluster around major industrial centres. The field's popularity rests on the diversity of tasks to be addressed, the wide spectrum of physical phenomena involved, the methods used, and the complexity of the problems.

Solving physical field problems

Today, physical field problems are solved with software packages that implement the core algorithms. These packages have grown over the years, and their development now takes the equivalent of the labour of hundreds of programmers and other professionals each year, or more.

Compared with the 1970s to 1990s, relatively few such packages are now developed by individuals or small groups. Packages developed this way today are usually research codes that are not generic but intended for a particular purpose, such as describing specific physical phenomena or geometries, or creating and developing new numerical methods. Software packages can be commercial, free, and/or open source.

Commercial software often comes with a wider set of standard tools, so it is usually more convenient for typical and immediate industrial applications. Open source software lets users extend the package code, which makes it possible to research algorithms and numerical and physical models and to solve specialized tasks.

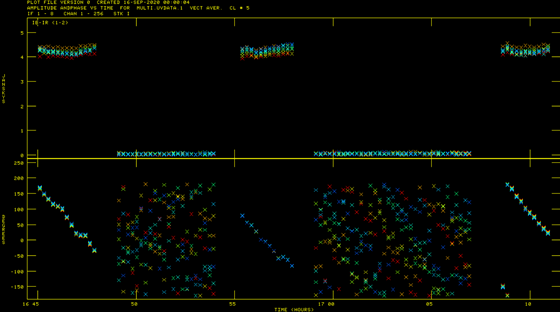

Processing of radio astronomical data

Since 2010, ERI VIRAC scientific staff have carried out radio astronomical data processing in various projects, including the ERDF programme project “Earth’s near-field radio-astronomical research” and the 7th Framework Programme project “NEXPReS”. Over that time, data recorders and their recording formats have changed, and staff experience and competence have grown. This made it possible to upgrade the two existing data processing correlators and adapt them to specific project tasks: KANA, developed at VIRAC for processing observations of near-Earth objects, and SFXC, developed at JIVE for processing observations of objects distant from Earth. Each correlator is used for different tasks, and each requires its own set of preparatory procedures before processing.

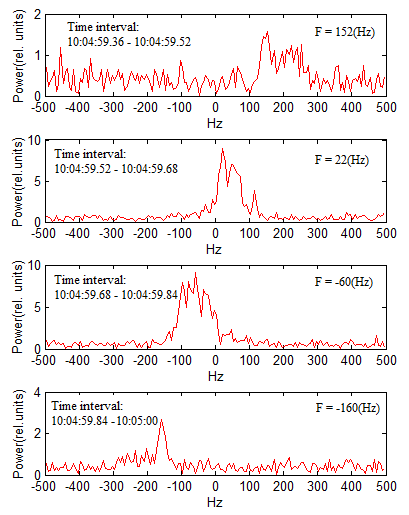

The KANA correlator was created in 2012 in the HPC department during the project “Earth’s near-field radio-astronomical research”. It is intended for processing near-Earth object data, as it corrects for the frequency and time shifts of the observed object. Time offset compensation is required to cross-correlate signals received at two stations, because the signal arrives at each station at a different time, depending on the station's location on Earth and its position relative to the observed object. Frequency offset compensation is required because near-Earth objects move relative to the Earth, which produces a Doppler shift that must be removed before the two signals are cross-correlated.
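The role of the two corrections can be illustrated with a minimal sketch. It is not KANA's implementation: it assumes a whole-sample delay and a constant Doppler shift (in a real observation both change over time), and all function names and parameter values are hypothetical. A noise-like signal stands in for station A, a delayed and frequency-shifted copy stands in for station B, and the zero-lag cross-correlation is computed with and without compensation.

```python
import cmath
import math
import random


def make_signal(n, seed=0):
    """Complex Gaussian noise standing in for the signal at station A."""
    rng = random.Random(seed)
    return [complex(rng.gauss(0, 1), rng.gauss(0, 1)) for _ in range(n)]


def apply_delay_and_doppler(signal, delay_samples, doppler_hz, sample_rate_hz):
    """Simulate station B: the same signal, delayed and frequency-shifted."""
    n = len(signal)
    out = []
    for k in range(n):
        src = k - delay_samples
        sample = signal[src] if 0 <= src < n else 0j
        phase = 2 * math.pi * doppler_hz * k / sample_rate_hz
        out.append(sample * cmath.exp(1j * phase))
    return out


def compensate(signal, delay_samples, doppler_hz, sample_rate_hz):
    """Remove the (assumed known) Doppler shift, then undo the delay."""
    n = len(signal)
    derotated = [
        s * cmath.exp(-2j * math.pi * doppler_hz * k / sample_rate_hz)
        for k, s in enumerate(signal)
    ]
    # Advance by the delay; samples shifted in from beyond the end are zero.
    return [
        derotated[k + delay_samples] if 0 <= k + delay_samples < n else 0j
        for k in range(n)
    ]


def zero_lag_correlation(a, b):
    """Normalized magnitude of the zero-lag cross-correlation of a and b."""
    num = abs(sum(x * y.conjugate() for x, y in zip(a, b)))
    den = math.sqrt(sum(abs(x) ** 2 for x in a) * sum(abs(y) ** 2 for y in b))
    return num / den if den else 0.0


if __name__ == "__main__":
    # Hypothetical values chosen only for illustration.
    station_a = make_signal(2048)
    station_b = apply_delay_and_doppler(station_a, 5, 37.0, 1000.0)
    print("uncompensated:", zero_lag_correlation(station_a, station_b))
    print("compensated:  ",
          zero_lag_correlation(station_a, compensate(station_b, 5, 37.0, 1000.0)))
```

Without compensation the normalized correlation stays near zero, because the delayed, rotated copy no longer lines up with the original. After both corrections it comes out close to 1.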

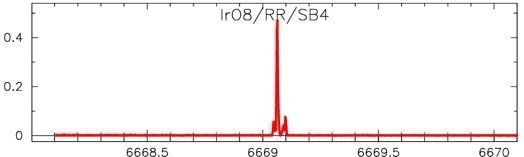

Since 2017, VIRAC staff, in cooperation with the Department of Radio Astronomy of Nicolaus Copernicus University, have made a number of VLBI observations, which VIRAC staff planned and processed. These observations provided new and important knowledge in VLBI data processing. Thanks to this knowledge, an observation with RT32 and RT16 in interferometer mode was successfully performed on May 2, 2019, and the results of the data processing were published.

This experience made it possible to plan and perform three-antenna interferometer observations on June 4 and 5, 2019. The Irbene RT32 (Latvia), Toruń RT4 (Poland) and Onsala O8 (Sweden) stations took part in the observation, which lasted several hours. Processing the data from this observation gave insight into the equivalent observation data processing in the EVN network, confirming the ability of VIRAC specialists to process large-scale observation data.

As a result of these observations and the processing of the interferometry data, a new collaboration was started within the Precise project, whose goal is to investigate fast radio bursts. Several EVN stations participated in the Precise project, and part of the observation processing was carried out on VIRAC clusters, confirming the ability of VIRAC staff to process observation data in large and significant international projects.

Research with the local interferometer continued in 2020. In collaboration with Yamaguchi University (Japan) and North-West University (South Africa), VIRAC staff were involved in observation and data processing, which further strengthened their competence in VLBI data processing.

Different types of numerical problems require different computing power. Typically, small design tasks with the corresponding numerical calculations can be handled by an average office computer, while modelling gas or liquid flow around large objects (such as bridges or ships) may require significantly more expensive machines with more memory and more processor cores.

A high-performance computing cluster[1], that is, a specialized system of multiple machines (10-100) totalling several hundred cores, becomes necessary for fluid-structure interaction problems (for example, bridge oscillation in the wind[2] or aircraft wing vibrations[3]) and for multiphase flow calculations (oil discharge after a ship accident[4], chemical reactions[5], fuel injection into a car engine cylinder[6], etc.).

Finally, challenges such as global climate modelling[7], fundamental research in turbulence[8], and brain modelling in biology[9] may require a supercomputer with several hundred thousand cores and a power consumption of tens of megawatts.

VIRAC research and other activities are planned at the middle level, that of the HPC cluster, using the knowledge of Ventspils University of Applied Sciences staff in mechanics and continuum physics. The high-performance computing direction builds on experience from research and development projects.

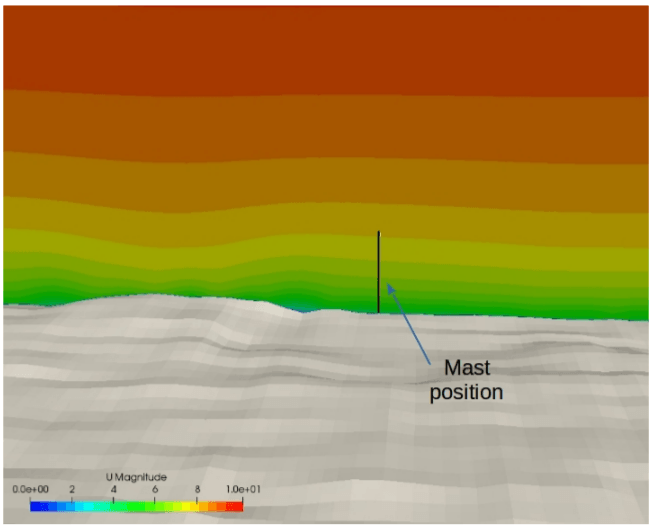

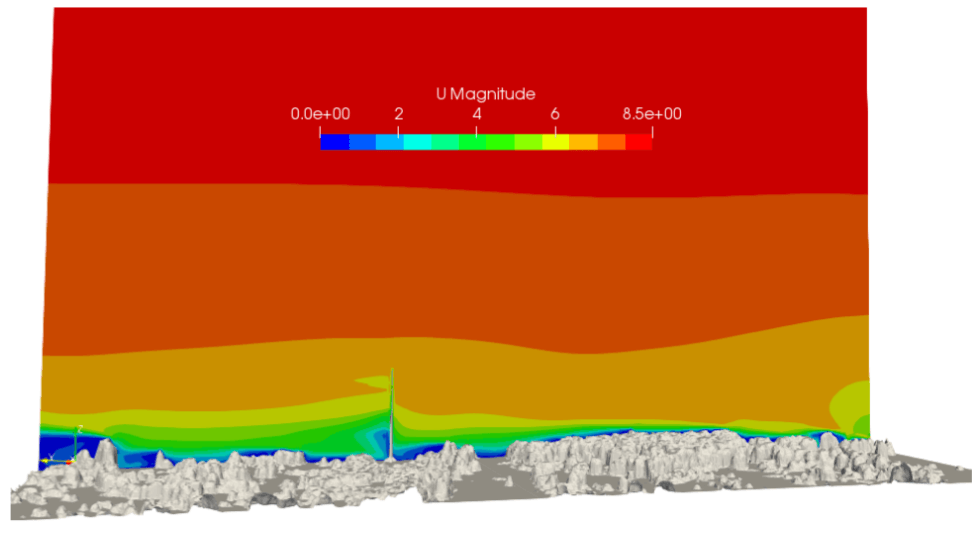

Numerical modelling has been done in support of local industry (several small calculations of the hydrodynamics and solid mechanics of a vertical axis wind generator), as well as in studies of the Irbene radio telescope complex infrastructure, such as calculations of RT-16 and RT-32 antenna deformations, which were compared with strain gauge readings for design quality control.

In addition to these activities, the VIRAC High-Performance Computing department has close links with the Paul Scherrer Institute in Switzerland: calculations for the institute's infrastructure, such as proton beam targets, are carried out with the help of the VIRAC High-Performance Computing department.

Similarly, the VIRAC High-Performance Computing department has supported the Crystal Growth Calculation Group of the Department of Environmental Physics of the University of Latvia with numerical hydrodynamic calculations.

References:

- HPC – High Performance Computing

- Billah, K.Y., Scanlan, R.H., Resonance, Tacoma Narrows bridge failure, and undergraduate physics textbooks. Am. J. Phys., 59 (2), pp. 118-124, 1991.

- Schewe, G., Flow-induced vibrations and the Landau equation. Journal of Fluids and Structures, 43, pp. 256-270, 2013.

- Vethamony, P., et al., Trajectory of an oil spill off Goa, eastern Arabian Sea: Field observations and simulations. Environmental Pollution, 148 (2), pp. 438-444, 2007.

- Santana, S.H., Numerical simulation of mixing and reaction of Jatropha curcas oil and ethanol for synthesis of biodiesel in micromixers. Chemical Engineering Science, 132, pp. 159-168, 2015.

- Baratta, M., Rapetto, N., Fluid-dynamic and numerical aspects in the simulation of direct CNG injection in spark-ignition engines. Computers & Fluids, 103, pp. 215-233, 2014.

- Vital, J.A., et al., High-performance computing for climate change impact studies with the Pasture Simulation model. Computers and Electronics in Agriculture, 98, pp. 131-135, 2013.

- Borrell, G., et al., A code for direct numerical simulation of turbulent boundary layers at high Reynolds numbers in BG/P supercomputers. Computers & Fluids, 80 (10), pp. 37-43, 2013.

- Matsumoto, Y., et al., Toward the multi-scale simulation for a human body using the next-generation supercomputer. Procedia IUTAM, 10, pp. 193-200, 2014.